Power Analysis

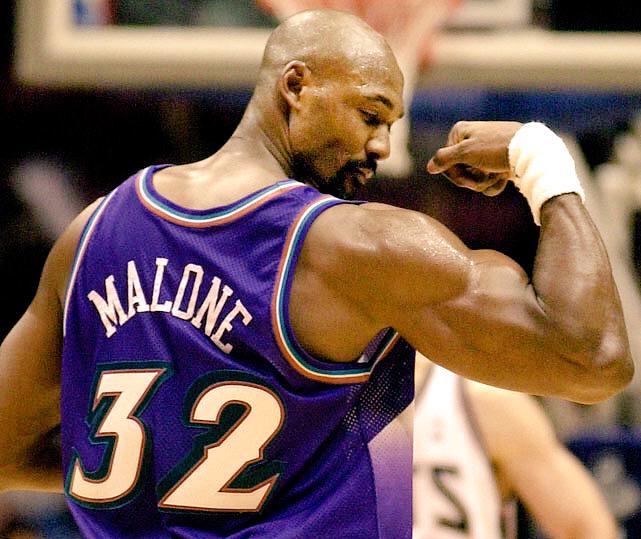

Statistical models are a lot like us: in order to become stronger, they first have to bulk up. While my daily diet of handfuls of birthday-cake-flavored protein powder hasn’t yet paid off in the strength department, it’s no mystery what a model needs to get stronger: data.

Power analyses can give us answers to questions of how much data we need to identify a certain size effect in an inferential model. Come with me, as we judge the proverbial statistical model Mr. Universe contest.

Understanding Power

In hypothesis testing, there are two types of errors:

- Type 1: We reject the null hypothesis when it is actually true (false positive)

- Type 2: We fail to reject the null when the alternative is true (false negative)

We account for type 1 error by setting a designated alpha that we compare our p-value to; this value is usually .05. Remember what the p-value represents: it’s the probability that we’d see a given result (or something more extreme) if the null hypothesis were true. Getting a p-value below .05 doesn’t prove that the null is false; it means that a result like ours would be very unlikely if the null were true. You can adjust alpha as you see fit based on how much you care about type 1 error.
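A quick simulation can make the meaning of alpha concrete. The sketch below isn’t part of the original analysis: it assumes normally distributed scores with a true mean of 15 and a standard deviation of 7 (both made up), so the null hypothesis is true by construction, and then counts how often a one-sided t-test rejects it anyway at an alpha of .05.

```r
# Illustrative simulation: every number here is an assumption, not real game data.
set.seed(42)
alpha  <- 0.05
n_sims <- 5000

# The null is true by construction: each sample is drawn with a mean of exactly 15.
p_values <- replicate(n_sims, {
  x <- rnorm(30, mean = 15, sd = 7)
  t.test(x, mu = 15, alternative = "greater")$p.value
})

# Share of simulated experiments that wrongly reject the null (type 1 errors)
false_positive_rate <- mean(p_values < alpha)
false_positive_rate
```

The rejection rate should land close to alpha, which is exactly what alpha is meant to control.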

So what do we have in place to prevent type 2 error? That’s where power comes into play.

If alpha was the type 1 error rate, let’s say beta is the type 2 error rate. Power is simply 1-beta. Generally, an acceptable power value is .8. So at this baseline value, we’d fail to reject the null when the alternative is true 20% of the time (not great, but false negatives are seen as less troublesome than false positives).
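Power and beta can be estimated by simulation, too. This is a rough sketch under made-up assumptions: scores are normal with a standard deviation of 7, the true mean sits half a standard deviation above a null value of 15, and each experiment has 27 observations. The alternative is true by construction, so every failure to reject is a type 2 error.

```r
# Illustrative simulation: the sample size, mean, and sd are all assumptions.
set.seed(42)
alpha  <- 0.05
n_sims <- 5000

# The alternative is true by construction: the true mean is 0.5 SDs above 15.
p_values <- replicate(n_sims, {
  x <- rnorm(27, mean = 15 + 0.5 * 7, sd = 7)
  t.test(x, mu = 15, alternative = "greater")$p.value
})

power_estimate <- mean(p_values < alpha)  # share of correct rejections
beta_estimate  <- 1 - power_estimate      # share of misses (type 2 errors)
c(power = power_estimate, beta = beta_estimate)
```

Under these assumptions the estimated power should come out in the neighborhood of the .8 baseline.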

When we’re looking at a power analysis, there are four numbers that are all interacting:

- Effect Size

- Power (1-beta)

- Alpha

- Sample Size

How do these parameters affect power? In general, the larger the parameter, the larger the power.

- Larger effect sizes lead to increased power; it’s easier to identify larger effect sizes than smaller ones.

- Larger values of alpha lead to increased power; less stringent values to determine significant results means that we’re less likely to miss significant results.

- Larger sample sizes lead to increased power; more samples lead to more precise distributions of the test statistic, creating more distinction between the actual and hypothesized test statistic distribution.
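The three relationships above can be checked numerically. The sketch below uses base R’s power.t.test() from the stats package, with sd = 1 so that delta acts as a standardized effect size. The helper name power_for and the grids of values are my own choices for illustration.

```r
# Hypothetical helper: power of a one-sample, one-sided t-test
power_for <- function(n, d, alpha) {
  power.t.test(n = n, delta = d, sd = 1, sig.level = alpha,
               type = "one.sample", alternative = "one.sided")$power
}

# Vary one input at a time while holding the other two fixed
by_effect <- sapply(c(0.2, 0.5, 0.8),    function(d) power_for(30, d, 0.05))
by_alpha  <- sapply(c(0.01, 0.05, 0.10), function(a) power_for(30, 0.5, a))
by_n      <- sapply(c(10, 30, 100),      function(n) power_for(n, 0.5, 0.05))

# Each row should increase from left to right
round(rbind(by_effect, by_alpha, by_n), 3)
```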

With a power analysis, we need to know three of these parameters to calculate the fourth. The best way to increase power is to increase the sample size, but know that for most research, this can be very expensive. Rather than just sampling a crazy amount and paying through the nose for it, you can use a power analysis to calculate the minimum sample size you’d need to reach a target level of power.
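Here is a minimal sketch of the "know three, solve for the fourth" idea, using base R’s power.t.test(), which solves for whichever argument is left out. The specific inputs (an effect size of 0.5, a sample of 50) and the variable names are assumptions chosen only for illustration.

```r
# Solve for sample size: n is left out
n_needed <- power.t.test(delta = 0.5, sd = 1, sig.level = 0.05, power = 0.8,
                         type = "one.sample", alternative = "one.sided")$n
ceiling(n_needed)  # round up, since we can't collect a fraction of an observation

# Solve for the smallest detectable effect: delta is left out
min_effect <- power.t.test(n = 50, sd = 1, sig.level = 0.05, power = 0.8,
                           type = "one.sample", alternative = "one.sided")$delta
min_effect

# Solve for power: power is left out
achieved_power <- power.t.test(n = 50, delta = 0.5, sd = 1, sig.level = 0.05,
                               type = "one.sample",
                               alternative = "one.sided")$power
achieved_power
```

Rounding the sample size up rather than to the nearest integer keeps the design at or above the target power.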

Now here’s a warning that a lot of people don’t really pay attention to: try not to perform a post-hoc power analysis, that is, calculating the power of a test after it has already been run (Daniël Lakens has a good blog post on the subject). The researcher who is always looking for a significant result can take an experiment that has low power and use it as an excuse for not finding a significant outcome. Instead, treat power as a baseline set in advance (again, .8 is a generally accepted value) to determine what sample size is necessary to capture the minimum effect size you or your stakeholders deem practically significant.

Data

We’re going to go back in time and pull up the Derrick Rose and LeBron James game score data for the 2011 season. We used this back in the t-test lesson, and guess what… we’ll be using it for more t-tests! This time, though, we’ll be using a power analysis in our study.

library(tidyverse)

rose <- tibble(Player = "Rose",
               GmSc = c(14, 26.4, 14.7, 22.2,
                        9.7, 8, 23.2, 19.7, 27.6, 19, 14.9, 21.5, 20.7,
                        24.5, 6, 12.6, 26.3, 7.9, 21.4, 19.3, 20.7, 13.8,
                        7.3, 26.4, 26.2, 15.6, 16.1, 14.9, 18.1, 16.7,
                        21.4, 17.2, 9.1, 22, 24.4, 25.9, 9.9, 23.2, 26.8,
                        22.1, 20.2, 16.6, 18.9, 18.5, 17.9, 16.5, 30.9, 5,
                        22, 21, 16.9, 13.6, 36.4, 23.9, 13.3, 9.9, 19.4,
                        4.2, 13.8, 16.7, 19, 20.8, 23.7, 16.2, 17, 13.5,
                        24, 16.1, 28, 14.4, 32.5, 16.6, 22.8, 23.1, 32.6,
                        12.9, 27.7, 6.7, 31.6, 15.9, 8.6))

james <- tibble(Player = "James",
                GmSc = c(16, 9.6, 10, 15.6, 23.7,
                         20.6, 19.4, 22.8, 29, 17.6, 20.6, 20.6, 21.7,
                         15.5, 17, 18, 11.7, 19.8, 12, 33.7, 19.7, 12.5,
                         28.6, 21.7, 19, 11.1, 14.5, 27, 24.4, 11.2, 26.8,
                         33.3, 12.9, 17.6, 23.1, 28.8, 24, 20.1, 35.1, 17.4,
                         20.5, 30.1, 15, 34, 25.6, 22.8, 46.7, 17.2, 5.3,
                         39.3, 16.9, 14.5, 20.3, 17.7, 17.9, 25.5, 27, 19.7,
                         26.2, 20.2, 24, 26.5, 17.8, 23.8, 16.5, 12.7, 32.2,
                         20.5, 14.9, 28.2, 28.6, 21.9, 34.6, 26.1, 26.6, 22,
                         24.7, 23.2, 26.4))

Power Analysis in R

Let’s first look again at whether Derrick Rose’s average game score was significantly higher than a made up league average of 15. This is a one-sided, one-sample t-test: we are only interested in answering if Rose’s game scores were better, not worse, than the league average.

We’ll use the pwr package to do our power calculations. Install it first and then bring it up with library().

Let’s think about how large of a sample of game scores we would need for this experiment. To calculate it, we need: effect size, power, and alpha. Power and alpha can be kept at their general values of .8 and .05. But how do we determine effect size?

The pwr package includes conventional effect sizes for different tests, as laid out by Jacob Cohen, with a label for the size of each effect (small, medium, or large). We can access these with the cohen.ES function. We should note that effect sizes aren’t one-size-fits-all; it usually takes some discussion with stakeholders to identify what size effect is practically significant.

library(pwr)

#we specify the type of test as t and the effect size as medium
cohen.ES(test = "t", size = "medium")

##
## Conventional effect size from Cohen (1982)
##
## test = t
## size = medium
## effect.size = 0.5

We can see that the medium effect size for a t-test is 0.5. Knowing this, we can calculate the required sample size to capture a medium effect with power of .8 and alpha of .05 using the pwr.t.test function.

pwr.t.test(d = .5, sig.level = .05, power = .8, type = "one.sample",
           alternative = "greater")

##
## One-sample t test power calculation
##
## n = 26.13753
## d = 0.5
## sig.level = 0.05
## power = 0.8
## alternative = greater

In this function, d represents effect size, sig.level is alpha, and power is… well, power. The type of t-test is specified by type and alternative. Rounding up, we can see that we’d need 27 games to identify a medium effect size. Knowing we have 81 games, let’s see what the smallest effect size we’ll be able to identify is.

pwr.t.test(n = 81, sig.level = .05, power = .8, type = "one.sample",
           alternative = "greater")

##
## One-sample t test power calculation
##
## n = 81
## d = 0.2786405
## sig.level = 0.05
## power = 0.8
## alternative = greater

With a power of .8, we’d be able to identify an effect size of about .28. This is slightly larger than Cohen’s predefined small effect size of .2.

Knowing this, let’s perform the t-test.

t.test(rose$GmSc, mu = 15, alternative = "greater")

##
## One Sample t-test
##
## data: rose$GmSc
## t = 4.8825, df = 80, p-value = 2.633e-06
## alternative hypothesis: true mean is greater than 15
## 95 percent confidence interval:
## 17.4552 Inf
## sample estimates:
## mean of x
## 18.72469

We get a p-value below .05, indicating that Rose had a significantly better average game score than 15.

Now you might be wondering what exactly the effect size was here. There isn’t one formula for it, as it’s calculated differently for different tests. For t-tests, though, it is Cohen’s d:

$$d = \frac{\bar{x}_1 - \bar{x}_2}{s_{\text{pooled}}}$$

The numerator is pretty simple, but the denominator is a bit complicated. The denominator is the pooled standard deviation: a single standard deviation estimate for multiple groups. In the case of a one sample t-test, the denominator is just the standard deviation of the one sample, so we can calculate our effect size like so:

(mean(rose$GmSc) - 15) / sd(rose$GmSc)

## [1] 0.5425054

So we saw about a medium effect size. Based on the power analysis we ran prior to the t-test, we saw we’d be able to identify an effect this size with relatively high power.
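As a sanity check, the same effect size can be recovered from the t-test output alone. For a one-sample t-test, t is the mean difference divided by sd / sqrt(n), so dividing t by sqrt(n) gives the effect size back. The sketch below plugs in the t statistic and sample size reported above; the variable names are my own.

```r
t_stat <- 4.8825  # t statistic reported by the one-sample t.test() above
n      <- 81      # number of Rose game scores (df + 1)

# d = (mean - mu) / sd = t / sqrt(n) for a one-sample t-test
d_from_t <- t_stat / sqrt(n)
d_from_t
```

This comes out to about 0.5425, matching the direct calculation up to rounding in the reported t statistic.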

Let’s look at the two-sample example, comparing LeBron to D-Rose.

pwr.t.test(d = .5, sig.level = .05, power = .8, type = "two.sample")

##
## Two-sample t test power calculation
##
## n = 63.76561
## d = 0.5
## sig.level = 0.05
## power = 0.8
## alternative = two.sided
##
## NOTE: n is number in *each* group

We’d need about 64 games in each group to detect a medium effect size with a power of .8. We have enough games from both players to meet this.
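Since we have more games than the minimum, we can also ask how much power the design has before running the test. This is a rough sketch using base R’s power.t.test() rather than pwr. It assumes equal group sizes, so I plug in 79, the number of James game scores and the smaller of the two samples, which makes the answer slightly conservative.

```r
# Power to detect d = 0.5 with 79 games per group (James has 79, Rose has 81)
power_two_sample <- power.t.test(n = 79, delta = 0.5, sd = 1, sig.level = 0.05,
                                 type = "two.sample",
                                 alternative = "two.sided")$power
power_two_sample
```

The result should sit comfortably above the .8 baseline, which is what we’d expect with more than 64 games per player.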

t.test(james$GmSc, rose$GmSc, var.equal = TRUE)

##
## Two Sample t-test
##
## data: james$GmSc and rose$GmSc
## t = 2.6911, df = 158, p-value = 0.007888
## alternative hypothesis: true difference in means is not equal to 0
## 95 percent confidence interval:
## 0.8017541 5.2248125
## sample estimates:
## mean of x mean of y
## 21.73797 18.72469

We can see that we got a significant result; LeBron’s average game score was higher than Rose’s average. We can estimate the effect size manually, or use the cohen.d function from the effsize package. Here I show both methods:

#manual method; dividing difference in means by pooled standard deviation
diff.in.means <- (mean(james$GmSc) - mean(rose$GmSc))

pooled.sd <- sqrt((((length(james$GmSc)-1)*var(james$GmSc)) +
                   ((length(rose$GmSc)-1)*var(rose$GmSc))) /
                  (length(james$GmSc)+length(rose$GmSc)-2))

diff.in.means / pooled.sd

## [1] 0.4255382

#using cohen.d(); specify pooled = TRUE
library(effsize)

cohen.d(james$GmSc, rose$GmSc, pooled = TRUE)

##
## Cohen's d
##
## d estimate: 0.4255382 (small)
## 95 percent confidence interval:
## lower upper
## 0.1097100 0.7413664

This effect size is slightly lower than the medium effect size we had put into the sample size calculation prior to running the test.
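The two-sample effect size can also be cross-checked against the t-test output. With a pooled standard deviation, t equals d divided by sqrt(1/n1 + 1/n2), so multiplying t by that term recovers d. The sketch below uses the reported t statistic and the two sample sizes (79 James games, 81 Rose games); the variable names are mine.

```r
t_stat <- 2.6911  # t statistic from the two-sample t.test() output
n1     <- 79      # number of James game scores
n2     <- 81      # number of Rose game scores

# d = t * sqrt(1/n1 + 1/n2) for an equal-variance two-sample t-test
d_from_t <- t_stat * sqrt(1 / n1 + 1 / n2)
d_from_t
```

This gives roughly 0.4255, agreeing with both the manual calculation and cohen.d().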

Post-test, power is pretty much irrelevant. It represents the probability of an event that already happened: the test correctly rejecting the null when it is false. It doesn’t really matter how this smaller effect size impacted power or sample size; the results were significant.

With an understanding of power, we can now focus on both type 1 and 2 errors. Doing so helps us both adequately prepare and analyze research. A lot of researchers focus only on type 1 errors, but knowing topics associated with type 2 error, such as effect and sample size, can help us create a better, more reliable experiment.